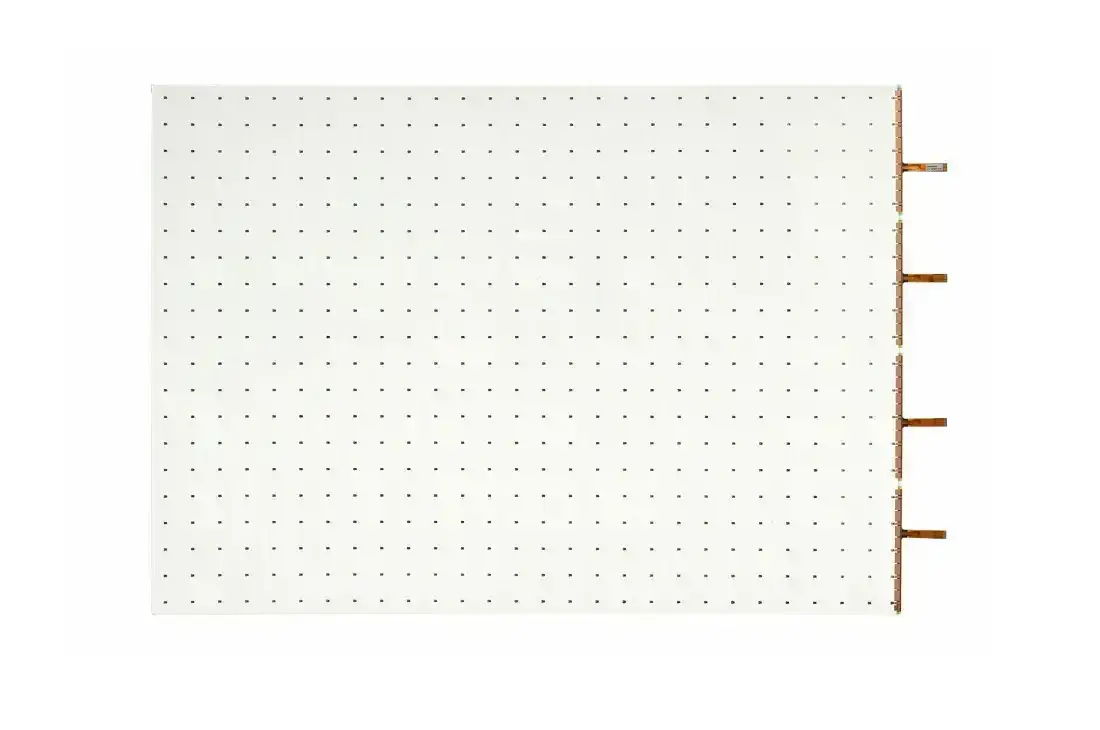

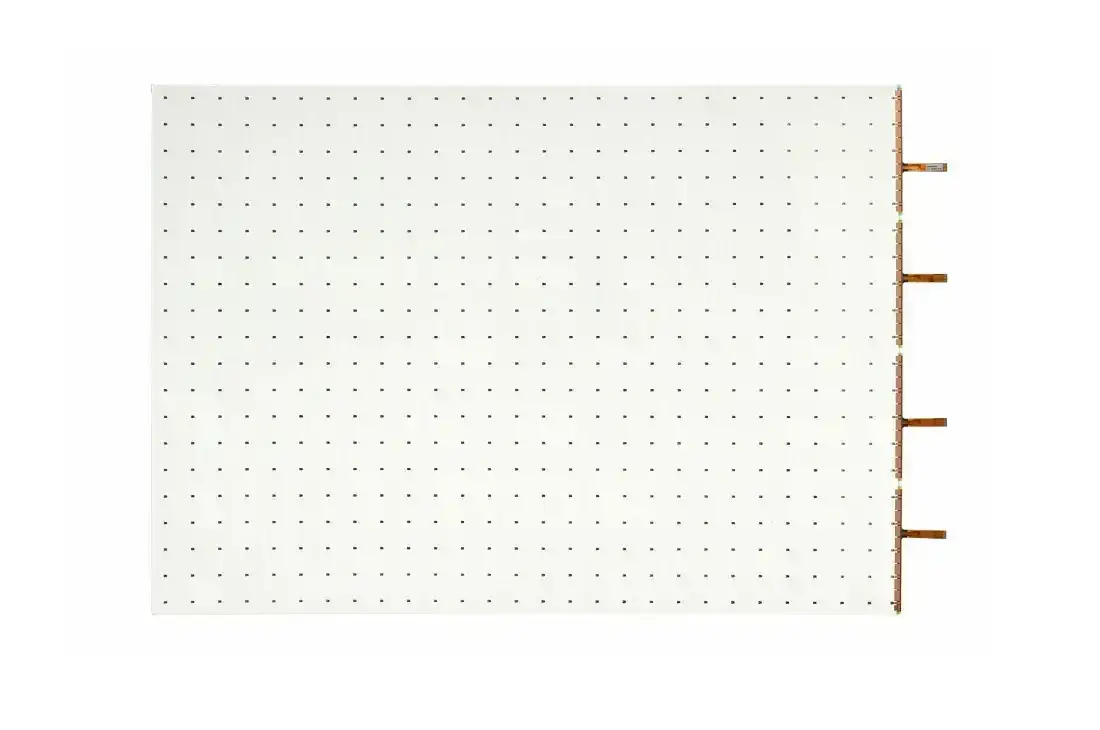

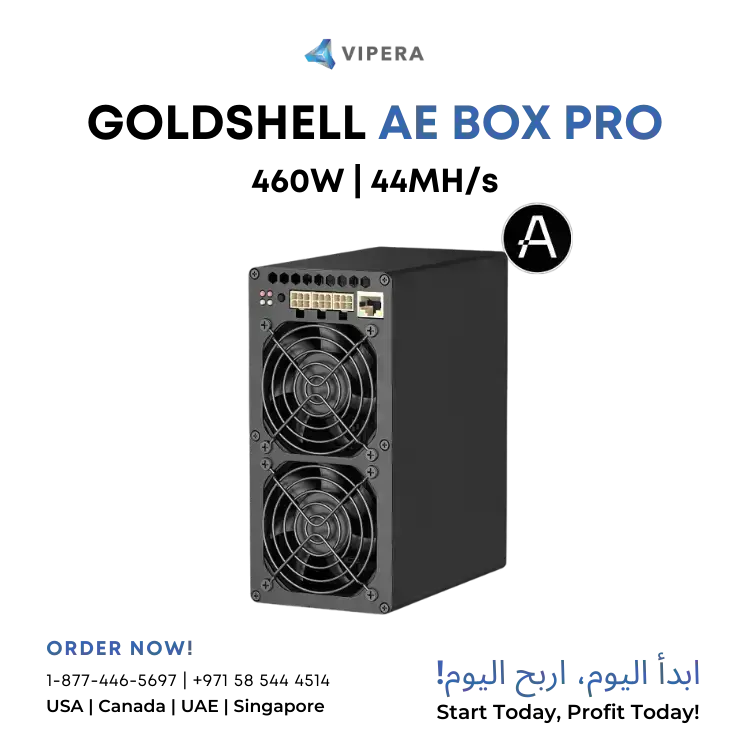

Goldshell AE BOX Home 37MH/s 360W (ALEO)

Exceptional Hashrate: Achieve a powerful 37MH/s, maximizing ALEO mining efficiency.

Low Power Consumption: Draws just 360W on 110V-240V input, reducing electricity costs while maintaining high output.
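To put the 360W figure in household terms, here is a minimal sketch of the daily energy use and running cost, assuming the miner runs 24 hours a day at its rated draw. The electricity rate of $0.12 per kWh is a placeholder assumption, not a figure from this listing; substitute your own tariff.

```python
# Rough running-cost estimate for a miner at a constant rated draw.
# POWER_WATTS comes from the listing; ASSUMED_RATE_USD_PER_KWH is a
# hypothetical tariff used only for illustration.

POWER_WATTS = 360.0
ASSUMED_RATE_USD_PER_KWH = 0.12  # assumption: replace with your local rate


def daily_energy_kwh(power_watts: float, hours: float = 24.0) -> float:
    """Energy used over `hours` at a constant draw, in kilowatt-hours."""
    return power_watts * hours / 1000.0


def daily_cost_usd(power_watts: float, rate_per_kwh: float, hours: float = 24.0) -> float:
    """Electricity cost over `hours` at the given rate per kWh."""
    return daily_energy_kwh(power_watts, hours) * rate_per_kwh


if __name__ == "__main__":
    energy = daily_energy_kwh(POWER_WATTS)
    cost = daily_cost_usd(POWER_WATTS, ASSUMED_RATE_USD_PER_KWH)
    print(f"Energy per day: {energy:.2f} kWh")
    print(f"Cost per day at the assumed rate: ${cost:.2f}")
```

At the rated draw this works out to 8.64 kWh per day; the cost scales linearly with whatever rate you pay.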

zkSNARK Algorithm: Specially optimized for ALEO mining, ensuring compatibility with cutting-edge blockchain technologies.

Quiet Operation: The AE Box is designed with home miners in mind, producing minimal noise without compromising performance.

Compact Design: Stylish and space-saving, it seamlessly fits into your home environment.

Power Supply Not Included: The miner is sold without a PSU. For optimal performance, select any high-quality 650W or higher modular PSU with sufficient efficiency and stability.

User-Friendly Setup: The AE Box is easy to configure, making it accessible to all levels of miners.

Affordable and Efficient: Designed for budget-conscious miners who seek high returns.

Home-Friendly Design: Quiet and compact, it’s ideal for residential settings.

Future-Proof Mining: Mines ALEO using the advanced zkSNARK algorithm, staying ahead of blockchain advancements.

Low power and low noise, with an estimated ROI within 4 months (at time of writing).
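The ROI claim depends on coin price, network difficulty, and your electricity rate, so it is worth checking against your own numbers. The sketch below shows the arithmetic, assuming the payback is measured against the $479.00 price alone (the PSU is extra) and that 4 months is roughly 120 days. The daily revenue and power cost passed in the example are hypothetical values, not figures from this listing.

```python
# Simple payback arithmetic for a miner purchase.
# All revenue and power-cost inputs are hypothetical; only the hardware
# price and the 4-month target come from the listing.
from typing import Optional


def payback_days(hardware_cost: float, daily_revenue: float,
                 daily_power_cost: float) -> Optional[float]:
    """Days needed to recover the hardware cost, or None if the miner
    does not earn more than it spends on electricity."""
    net_per_day = daily_revenue - daily_power_cost
    if net_per_day <= 0:
        return None
    return hardware_cost / net_per_day


def required_net_per_day(hardware_cost: float, target_days: float) -> float:
    """Net daily earnings needed to recover the cost within target_days."""
    return hardware_cost / target_days


if __name__ == "__main__":
    price = 479.00       # listed price, PSU not included
    target = 120         # assumption: 4 months taken as about 120 days
    print(f"Net earnings needed: ${required_net_per_day(price, target):.2f} per day")

    # Hypothetical inputs purely for illustration.
    days = payback_days(price, daily_revenue=5.00, daily_power_cost=1.00)
    print(f"Payback with the example inputs: {days:.0f} days")
```

If your expected net earnings fall below the required daily figure, the payback period will be longer than the 4 months quoted.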

Warranty: 6-month manufacturer warranty covering parts or replacement.

Sale price: $479.00

Regular price: $2,995.00

Save 84%